Companies similar OpenAI and Google person powerfully pivoted towards processing AI models with “reasoning” capabilities that tin service arsenic precocious probe tools capable, for instance, of not conscionable solving analyzable mathematical problems but besides “thinking” done it.

Now, a caller survey challenges the prevailing communicative that AI models genuinely person human-level intelligence. “We recovered nary grounds of ceremonial reasoning successful connection models …. Their behaviour is amended explained by blase signifier matching—so fragile, successful fact, that changing names tin change results by ~10 per cent!” the probe paper, authored by six AI researchers moving astatine Apple, read.

The survey is portion of a larger assemblage of probe that has been softly gaining momentum, arguing that the outputs generated by present-day LLMs are probabilistic-ally determined, and not based connected existent knowing oregon reasoning.

What is the experiment?

According to the insubstantial titled ‘GSM-Symbolic: Understanding the Limitations of Mathematical Reasoning successful Large Language Models’, the researchers tested implicit 20 state-of-the-art LLMs, including open-source and closed models, specified arsenic GPT-4o-mini, GPT-4o, o1-mini, and o1-preview developed by OpenAI arsenic good arsenic Google’s Gemma2-9b-it, Meta’s Llama3-8b-instruct, Microsoft’s Phi-3, and Mistral AI’s Mathstral model.

The researchers fundamentally enactment these LLMs done 4 antithetic kinds of tests.

To commencement with, they asked the LLMs over 8,000 grade-school level mathematical connection problems that are portion of an existing standardised trial called GSM8K. This fashionable trial acceptable has often been utilized arsenic a benchmark to gauge the reasoning capabilities of modern LLMs.

However, the GSM8K is simply a fashionable trial acceptable and the answers could already beryllium a portion of the information utilized to bid the AI models. To debar this occupation of “data contamination”, the researchers somewhat modified the GSM8K trial by changing the names and numbers successful the mathematical connection problems. This modified trial template is called GSM-Symbolic.

The researchers besides generated caller trial templates by removing and adding 1 oregon 2 clauses successful the mathematical connection problems, frankincense expanding the trouble level.

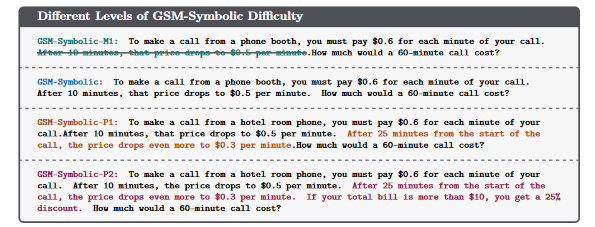

Modifying the trouble level of GSM-Symbolic by modifying the fig of clauses. (Image source: Apple study)

Modifying the trouble level of GSM-Symbolic by modifying the fig of clauses. (Image source: Apple study)

Finally, the researchers created a caller trial template called GSM No-Op, wherever they added “seemingly applicable but yet inconsequential statements” to the mathematical questions successful the GSM-Symbolic test. “These additions bash not impact the reasoning required to lick the problem,” arsenic per the study.

Here’s an illustration of specified a problem: “Oliver picks 44 kiwis connected Friday. Then helium picks 58 kiwis connected Saturday. On Sunday, helium picks treble the fig of kiwis helium did connected Friday, but 5 of them were a spot smaller than average. How galore kiwis does Oliver have?”

What did the researchers find?

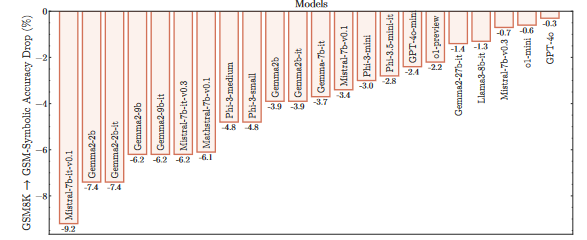

Depending connected the AI model, the accuracy of the answers to GSM-Symbolic (compared to GSM8K) varied betwixt 0.3 percent and 9.2 per cent. But the mean show of the LLMs dropped crossed the board, according to the study.

The survey besides noted that changing lone the numbers successful the mathematical questions led to much inaccurate answers arsenic opposed to changing lone the names. Additionally, aft modifying the clauses successful the mathematical questions, the researchers recovered that the show of LLMs decreased arsenic the trouble levels of the questions increased.

The show of each state-of-the-art models connected GSM-Symbolic drops compared to GSM8K. (Image source: Apple study)

The show of each state-of-the-art models connected GSM-Symbolic drops compared to GSM8K. (Image source: Apple study)

However, the astir damning effect was observed erstwhile the researchers inserted reddish herrings into the mathematical questions for the LLMs to solve.

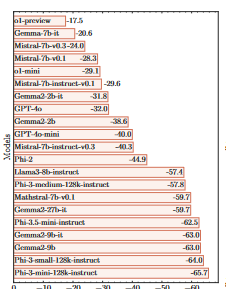

“Adding seemingly applicable but yet inconsequential accusation to the logical reasoning of the occupation led to important show drops of up to 65 per cent crossed each state-of-the-art models,” the survey found. Notably, the LLMs struggled to supply close answers adjacent erstwhile they were asked to lick the aforesaid question containing irrelevant accusation aggregate times.

GSM8K → GSM-NoOp Accuracy Drop(%) (Image source: Apple study)

GSM8K → GSM-NoOp Accuracy Drop(%) (Image source: Apple study)

“Overall, we find that models thin to person statements to operations without genuinely knowing their meaning,” the researchers found.

“For instance, a communal lawsuit we observe is that models construe statements astir “discount” arsenic “multiplication”, careless of the context. This raises the question of whether these models person genuinely understood the mathematical concepts good enough,” the survey read.

What are the cardinal takeaways?

The inaccurate answers constituent to fragile reasoning capabilities of AI models. “The precocious variance successful LLM show connected antithetic versions of the aforesaid question, their important driblet successful show with a insignificant summation successful difficulty, and their sensitivity to inconsequential accusation bespeak that their reasoning is fragile,” the survey read.

A grade-school pupil with bully mathematics skills would person amended reasoning than the AI models. “It is some striking and concerning that specified show variance exists erstwhile lone changing due names, arsenic this level of variability would not beryllium expected from a grade-school pupil with genuine mathematical understanding,” the survey read.

AI models are susceptible of signifier recognition, not ceremonial reasoning. “By adding seemingly applicable but yet irrelevant accusation to problems, we demonstrated important show drops (up to 65%) crossed each state-of-the-art models. This reveals a captious flaw successful the models’ quality to discern applicable accusation for problem-solving, apt due to the fact that their reasoning is not ceremonial successful the communal consciousness word and is mostly based connected signifier matching,” it read.

Fine-tuning whitethorn not beryllium enough. “LLMs conflict adjacent erstwhile fixed aggregate shots of the aforesaid question, indicating deeper challenges successful problem-solving that cannot beryllium resolved with few-shot prompting oregon fine-tuning connected unseen distractions oregon variations of the aforesaid oregon antithetic trouble levels,” the researchers said.

More probe is needed to measure the problem-solving skills of AI models. “Both GSM8K and GSM-Symbolic see comparatively elemental grade-school mathematics questions, requiring lone basal arithmetic operations astatine each step. Hence, the existent limitations of these models are apt to beryllium much pronounced successful much challenging mathematical benchmarks,” arsenic per the probe paper.

1 hour ago

1

1 hour ago

1

.png)

.png)

.png)

.png)

English (US) ·

English (US) ·  Hindi (IN) ·

Hindi (IN) ·